Jung’s Synchronicity theory is as fascinating as it is unscientific. It is “unscientific” because, by its very definition, it can’t be proven false: two events appear to be related even though no causal relation exists. Someone talks about dreaming of a golden scarab and suddenly a golden scarab hits the window. There is no apparent causal relationship, yet the pair of events is so unlikely that the idea of Synchronicity was developed around it. My rational mind is more inclined to think of this as selection bias (countless times something like this happens: you spend a few days in a city, and suddenly the news is filled with stories about that city). Nonetheless, when it happens I always feel uneasy, as if the Universe wanted to have a word with me.

So when I read the tweet below, by Mario Fusco, at a rather specific moment in my job, I felt called out.

Throughout my professional career, I have always tried to push the boundaries of the language(s) I was using, from assembly to C++, to achieve better engineering, more robust and safer code, fewer opportunities for bugs, simpler development, and improved collaboration. Sometimes this meant introducing the latest C++ standard, sometimes introducing concepts from other languages, and sometimes defining a DSL with the help of the preprocessor. So I am no stranger to raised eyebrows when people look at my code.

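Consider the following C++20 code:

    #include <ranges>
    #include <vector>

    std::vector<int> process( std::vector<int> const& input ) {
        // Keep the even numbers, then square them (a lazily evaluated view).
        auto squaredEvens = input
            | std::views::filter([](const int n) { return n % 2 == 0; })
            | std::views::transform([](const int n) { return n * n; });
        // A C++20 view does not convert implicitly to std::vector, so copy it explicitly.
        return std::vector<int>( squaredEvens.begin(), squaredEvens.end() );
    }

    void foo()
    {
        std::vector<int> data = {0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10};
        auto result = process( data );
        // do something with result
    }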

Until a few years ago, this was far from idiomatic C++, and the equivalent solution with standard library algorithms would have been clumsier, less readable, less efficient, and possibly more error-prone.
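To make the comparison concrete, here is a minimal sketch of how the same function might look with the pre-C++20 standard algorithms. The name processClassic is made up for this illustration, and this is only one of several ways to write it.

```cpp
#include <algorithm>
#include <iterator>
#include <vector>

// Hypothetical pre-C++20 counterpart of process(): two explicit passes
// and an intermediate container instead of a lazy pipeline.
std::vector<int> processClassic( std::vector<int> const& input ) {
    // Pass 1: keep the even numbers.
    std::vector<int> evens;
    std::copy_if( input.begin(), input.end(), std::back_inserter( evens ),
                  [](int n) { return n % 2 == 0; } );

    // Pass 2: square what is left.
    std::vector<int> squares;
    squares.reserve( evens.size() );
    std::transform( evens.begin(), evens.end(), std::back_inserter( squares ),
                    [](int n) { return n * n; } );
    return squares;
}
```

Each step has to spell out its iterators and its destination, and the temporary vector exists only to connect the two passes.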

Today this is perfectly valid C++20 code, but it is still not ingrained in the average C++ programmer and may be considered non-idiomatic, difficult, or not yet ready for a production codebase.

There are, though, plenty of reasons for using it. Here are the first that come to mind:

- it is composed of highly reusable blocks

- it decouples each operation from the others

- it offers a clear vision of the dependencies among parts

- it is easily modifiable and composable

Now consider the case in which, ahead of the ISO C++ standard (not such a difficult feat, actually), someone wrote a library (it’s just an example) to support this dataflow pattern in C++. Using this library in the codebase would certainly raise some concerns, not unlike those raised by using the latest standard.
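As a rough illustration of what such a library could look like, here is a minimal C++17 sketch. Everything in it (the flow namespace, Stage, filter, transform) is hypothetical and invented for this example. Unlike std::views it is eager, and it works only on std::vector<int>.

```cpp
#include <algorithm>
#include <iterator>
#include <vector>

namespace flow {  // hypothetical library name, for illustration only

// A pipeline stage wraps a callable that maps a vector to a new vector.
template <typename F>
struct Stage { F fn; };

// Stage that keeps only the elements satisfying pred.
template <typename Pred>
auto filter( Pred pred ) {
    auto fn = [pred]( std::vector<int> const& in ) {
        std::vector<int> out;
        std::copy_if( in.begin(), in.end(), std::back_inserter( out ), pred );
        return out;
    };
    return Stage<decltype(fn)>{ fn };
}

// Stage that applies op to every element.
template <typename Op>
auto transform( Op op ) {
    auto fn = [op]( std::vector<int> const& in ) {
        std::vector<int> out;
        std::transform( in.begin(), in.end(), std::back_inserter( out ), op );
        return out;
    };
    return Stage<decltype(fn)>{ fn };
}

// The pipe operator feeds the data on the left into the stage on the right.
template <typename F>
std::vector<int> operator|( std::vector<int> const& in, Stage<F> const& stage ) {
    return stage.fn( in );
}

} // namespace flow
```

With this in place, `data | flow::filter(isEven) | flow::transform(square)` reads much like the C++20 version, which is exactly why it would raise the same eyebrows.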

The reasons are the same: something is new and unfamiliar, and it takes some effort to understand and absorb. Every time you introduce something new, there is a cost associated with the introduction.

So my claim is that, regardless of whether you are introducing a new feature from the latest standard, adopting a library that enables idioms from other languages or paradigms, or defining a DSL, you may face some resistance.

But suppose that by using the FunctoMediaRocket idiom you’ll save 3 months of development time (meaning coding, testing, and debugging). Are you sure you don’t want to use it just because the team needs two weeks to learn it?

Or suppose that the CoflatmappedTurboMonoid, which no one has ever heard of, lets you cut the number of bugs in half but requires a month for the team to get accustomed to it?

Consider the following examples.

State Machine DSL

C and C++ happen to be very plastic languages, allowing the definition of simple DSLs via preprocessor macros. I have had both good and bad experiences with DSLs. In the past, I defined finite state machine helper macros both in Nintendo Z80 assembly and in C. I guess the work was good since, besides earning me the title “Knight of the Macros”, the DSL enabled PC programmers to write gameplay behavior in Z80 assembly. I don’t claim all the credit, since my colleagues were very skilled and motivated, but I am inclined to think that my DSL was an enabler for this to happen. Z80 code is no longer relevant, but consider the following C source code:

    M_STATE( ArcherMummyFsm, Aim )
        THIS( ArcherMummy );
        M_TRANSITIONS_BEGIN
            M_TRANSITION( !CombatActor_canSee( this, GameInstances_getMainActor() ), WaitState )
            M_TRANSITION( FrameCounter_isPast( this->timer ), LoadBowState )
        M_TRANSITIONS_END
        M_ROUTINE_BEGIN
            ArcherMummy_SetFacingToMainActor(this);
        M_ROUTINE_END
        M_ON_STATE_ENTRY_BEGIN
            ArcherMummy_SetFacingToMainActor(this);
            CombatActor_setNewAction( this, C_ARCH_Action_Wait );
        M_ON_STATE_ENTRY_END
    M_END_STATE

This code certainly does not resemble standard C, but it very clearly defines a state of a state machine. You immediately see which transitions leave this state and what is executed when the state is entered.

The very purpose of this is to hide the state machine implementation, letting the programmer focus on the higher level without being distracted by the plumbing. This is the same thing C++ routinely does: hide the underlying details. What if you have to debug? Well, this DSL had very few problems and, once developed, was used in (at least) three different games with no changes. And this was long before unit tests became a thing.
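To give an idea of how little plumbing such macros need to hide, here is a deliberately tiny sketch. It is not the DSL shown above: the FSM_* macros, the Archer struct, and the state names are all hypothetical, and the sketch covers only transitions (no entry actions or routines).

```cpp
// Hypothetical mini-DSL: each state becomes a function returning the next state.
enum class State { Wait, Aim };

struct Archer { bool seesTarget; };  // made-up actor, for illustration only

// Opens the step function of a state.
#define FSM_STATE( name )            State name##_step( Archer& self ) {
// Leaves the state as soon as the condition holds.
#define FSM_TRANSITION( cond, to )       if( cond ) return State::to;
// No transition fired: stay in the same state and close the function.
#define FSM_END_STATE( name )            return State::name; }

FSM_STATE( Wait )
    FSM_TRANSITION( self.seesTarget, Aim )
FSM_END_STATE( Wait )

FSM_STATE( Aim )
    FSM_TRANSITION( !self.seesTarget, Wait )
FSM_END_STATE( Aim )

// The plumbing that the state definitions never have to mention.
State step( State current, Archer& actor ) {
    switch( current ) {
        case State::Wait: return Wait_step( actor );
        case State::Aim:  return Aim_step( actor );
    }
    return current;
}
```

Whoever writes a state only lists conditions and targets. The dispatch in step() could be replaced by a table or by function pointers without touching a single state definition.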

Monadic Error Handling

After my exposure to Scala (and in agreement with John Carmack’s idea), I tried to port some functional programming idioms into C++. After all, C++ is advertised as a multi-paradigm language capable of supporting functional programming. (In this sense, how can an FP approach be considered non-idiomatic?)

    return result
        .andThen( [counter](){
            return counter == InvalidRollingCounter ?
                makeError( ErrorCode::CorruptedMemory ) :
                ChefLib::Error::Ok;
        })
        .toEither( static_cast<unsigned>(counter) );

Here is the imperative C++ equivalent:

    error = ChefLib::Error::Ok;
    if( result == NoError ) {
        if( counter == InvalidRollingCounter ) {
            error = makeError( ErrorCode::CorruptedMemory );
        }
    }
    if( error.isOk() ) {
        return std::variant<int,Error>( counter );
    }
    else {
        return std::variant<int,Error>( error );
    }

The FP version is shorter and easier to understand once you acquire the basic concepts of andThen and Either. The code and the flow are clear. No one is required to look at how “andThen” is implemented, any more than they need to look at the internals of std::variant to work with it.
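For readers who have never met these two concepts, here is a minimal sketch of how they could be built on top of std::variant. It is not the ChefLib implementation: the Result class, the Either alias, and the reduced ErrorCode enum are assumptions made up for this example, and only the method names mirror the snippet above.

```cpp
#include <variant>

// Hypothetical, reduced error type: Ok means "no error".
enum class ErrorCode { Ok, CorruptedMemory };

// Either holds a value of type T on success, or an ErrorCode on failure.
template <typename T>
using Either = std::variant<T, ErrorCode>;

class Result {
public:
    explicit Result( ErrorCode code = ErrorCode::Ok ) : code_{ code } {}

    bool isOk() const { return code_ == ErrorCode::Ok; }

    // Runs the next step only if everything went fine so far;
    // otherwise the first error is carried along untouched.
    template <typename F>
    Result andThen( F next ) const {
        if( !isOk() ) return *this;
        return Result{ next() };
    }

    // Ends the chain: the value on success, the error otherwise.
    template <typename T>
    Either<T> toEither( T value ) const {
        if( isOk() ) return Either<T>{ value };
        return Either<T>{ code_ };
    }

private:
    ErrorCode code_;
};
```

The whole idea fits in a few lines: andThen short-circuits on the first error, so the calling code never needs nested ifs to decide whether to continue.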

A change model

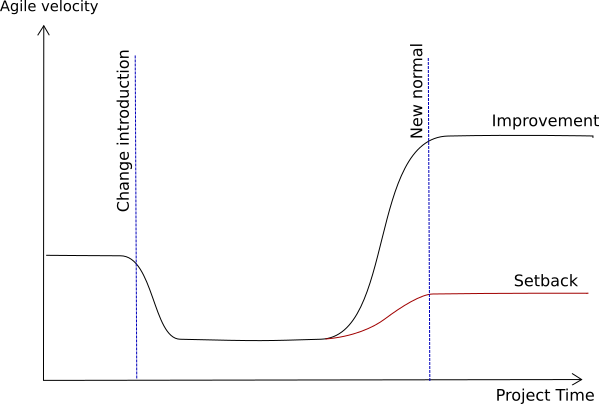

Engineering, software engineering included, is about tradeoffs. To show the tradeoff in this case, I’ll make use of the change model curve (sometimes referred to as the Kübler-Ross model).

The team starts with a given productivity (here defined as the agile velocity). When the change is introduced, there is a drop in productivity. That’s normal: the team needs to learn and try the new way of doing things by exploring, studying, experimenting, getting things wrong, and fixing them. This is a bit of a trauma from the team management point of view. Once the team has absorbed the change, productivity changes again.

Two outcomes are possible: either the change was a setback, resulting in a velocity lower than the original one, or it was an improvement.

So, is an improvement enough to declare victory, or at least to justify taking the chance of introducing a change?

Well, no. Consider the following diagram that includes the end of the project.

This drawing shows the work done as areas. In light red, right after the introduction of the change, you see the work lost. On the right side, in light green, the area represents the extra work that can be done for “free” thanks to the change. As long as the green area is larger than the red, the change is worth introducing.
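The comparison between the two areas can be sketched with a crude model in which the curve is flattened into rectangles: a constant velocity before the change, a constant lower velocity while learning, and a constant velocity afterwards. The struct, the function names, and the numbers in the usage note are all made up for illustration.

```cpp
// Crude rectangular model of the change curve (all names are hypothetical).
struct ChangeScenario {
    double velocityBefore;    // velocity without the change
    double velocityLearning;  // reduced velocity while the team learns
    double velocityAfter;     // velocity once the change is absorbed
    double learningWeeks;     // how long the learning phase lasts
    double remainingWeeks;    // weeks left in the project when the change starts
};

// Red area: work lost during the learning phase.
double workLost( ChangeScenario const& s ) {
    return ( s.velocityBefore - s.velocityLearning ) * s.learningWeeks;
}

// Green area: extra work done between the end of learning and the end of the project.
double workGained( ChangeScenario const& s ) {
    return ( s.velocityAfter - s.velocityBefore ) * ( s.remainingWeeks - s.learningWeeks );
}

// The change pays off only if the green area is larger than the red one.
bool worthIntroducing( ChangeScenario const& s ) {
    return workGained( s ) > workLost( s );
}
```

With a velocity of 20 dropping to 10 for 2 weeks and then rising to 25, the change costs 20 points. With 12 weeks left it gains 50 points and pays off; with only 4 weeks left it gains 10 and does not.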

Now, this is all well and good on paper, but in the Real World, telling when a project will be over is very hard unless you are at the very end. Some projects never end, in the sense that even after the first release the project is maintained for years.

Also, trying to predict quantitatively the performance gain from changing the way code is written can be hard, if not impossible.

Nonetheless, giving up on a potential improvement in performance is something I would do only for strong reasons, such as a very risk-averse or very critical project where everything is well defined and specified (not the usual context I’ve worked in).

One complaint I often hear is that the more unfamiliar the code looks to an expert programmer in a given language, the harder it is to find and train new joiners. Let’s split this complaint in two:

- finding candidates to hire is not affected by the code quality in most cases. Unless you show your company’s code to prospective employees, this is not an issue.

- learning a new library, idiom, or DSL should not be a problem for a programmer. Programming experience can’t be strictly tied to a language. It is like claiming that a driver who specializes in the Fiat Punto can’t learn to drive a Renault Clio.

To stress this last point, a programmer must be able to learn. It is a learn-or-die job. In this industry nothing stays the same for long. Fortran, Cobol, Delphi, and Basic, once mighty players among programming languages, are no more. Assembly was replaced by structured programming; structured programming was overtaken by object-oriented programming, and OO is possibly at its sunset. CVS was once king among version control systems, then Subversion came, and today Git is the de facto standard. Not to mention JavaScript web frontend frameworks, which evolve at the speed of light.

In other words, either you are able to learn or your best hope is to find a maintenance job on a legacy system.

In The Pragmatic Programmer, the authors Andrew Hunt and David Thomas encourage the reader to learn a new programming language every year (I couldn’t find the reference in the book, but I heard Andrew Hunt repeating this in an interview on the always excellent CoRecursive podcast).

The point is that language shapes the mind, and new languages give your brain new tools and perspectives for solving problems. Whether or not you end up using the language you learned, you are going to shift your designs to include new ideas.

How to change

Having cleared the way on when and why to introduce unidiomatic changes, it is time to take a look at the how.

This is a problem of social acceptance, not of technical acceptance. Programmers, like every other being, have a comfort zone that is … comfortable. Some programmers may be quite opinionated. Moreover, you may have a really hard time forcing decisions from above; the best move is to let the programmers themselves buy into the new technology.

From the management point of view, it is never easy to manage programmers (it is like herding cats); been there, done that. Leave too much freedom and you find yourself with five different languages, several experimental tools, and code that could have been written by aliens. Enforce too much control and you’ll get C-with-classes code that could have been written in the 90s, with all the classic problems that the language’s evolution has since managed to solve.

If you want to keep a healthy mix of innovators and traditionalists, you need to foster collaboration, making sure that fears are understood and desires are acknowledged. Innovators must be able to explain the advantages of new technologies, and traditionalists must be able to weigh the new against their experience, keeping an open mind and providing valuable feedback. Only then can decisions be evaluated and made.

How decisions should be made is a long story, so I’ll save it for another post. Nonetheless, it is important that decisions are made consciously rather than left to just happen.

Conclusions

Technical debt, as defined by Wikipedia, is the cost of future rework caused by a cheap or limited solution. Non-idiomatic solutions do not generally fall into this category. If a solution does not bring a productivity advantage then it has to be reworked, but this is generally true for any change, even an idiomatic one.

Change and learning are a core part of the programming profession. Programmers may be opinionated or happily settled in their comfort zone. For these reasons, nontrivial change must be well-motivated, analyzed, and managed. The right change at the right time may actually save a doomed project.

I know this is a sensitive topic, and I’ll be happy to hear your opinion. Don’t be shy, and comment 🙂